Language Testing in Austria: Taking Stock / Sprachtesten in Österreich: Eine Bestandsaufnahme

Summary

The book looks back on ten years of professional test development and language testing research in Austria. Part I describes the development of test systems at all levels of the educational system. The documentation covers German, which is the language of instruction for the large majority, the so-called modern foreign languages English, French, Italian and Spanish, and the classical languages Latin and Ancient Greek. Part II comprises a considerable number of studies that were carried out during and after the development and implementation of the new examination philosophy over the last ten years. The studies are placed within a validation framework that could serve as orientation for future language testing research in Austria.

Excerpt

Table Of Contents

- Cover

- Title

- Copyright

- About the author

- About the book

- This eBook can be cited

- Contents

- Acknowledgements

- Introduction (Günther Sigott)

- Part I: Test Development

- I.1 National Educational Standards Tests

- Bildungsstandardüberprüfung Deutsch: Kompetenzmodelle und Aufgabenentwicklung (Sandra Eibl / Silke Schwaiger / Simone Breit)

- Die Umsetzung der kriterialen Rückmeldung in der Bildungsstandardüberprüfung am Beispiel Lesen in Deutsch für die vierte und achte Schulstufe (Claudia Luger-Bazinger / Ursula Itzlinger-Bruneforth / Claudia Schreiner)

- The development of the Austrian Educational Standards Test for English Listening at grade 8 (Claudia Mewald / Dave Allan / Andrea Kulmhofer)

- The development of the Austrian Educational Standards Test for English Reading at grade 8 (Klaus Siller / Andrea Kulmhofer)

- The development of the Austrian Educational Standards Test for English Speaking at grade 8 (Claudia Mewald)

- The development of the Austrian Educational Standards Test for English Writing at grade 8 (Andrea Kulmhofer / Klaus Siller)

- I.2 National School-Leaving Exam (Matura)

- Deutsch als Unterrichtssprache: Das Konzept der schriftlichen Reife- und Diplomprüfung (Jürgen Struger)

- Die Standardisierte Reife- und Diplomprüfung (SRDP) Deutsch als Unterrichtssprache: Methodenkonzept und Hauptergebnisse der Feldtestung (Hermann Cesnik / Günther Sigott)

- Delivering reform in a high stakes context: from content-based assessment to communicative and competence-based assessment (Carol Spöttl / Kathrin Eberharter / Franz Holzknecht / Benjamin Kremmel / Matthias Zehentner)

- Ensuring sustainability and managing quality in producing the standardized matriculation examination in the foreign languages (Theresa Weiler / Doris Frötscher)

- Entwicklung von Testsystemen in den klassischen Sprachen (Fritz Lošek / Peter Glatz / Hermann Niedermayr / Irmtraud Weyrich-Zak)

- Aspekte der Implementierung der neuen Testsysteme in den klassischen Sprachen (Michael Sörös / Martin Seitz / Renate Glas / Walter Kuchling / Renate Oswald / Andrea Lošek)

- I.3 Tertiary-Level Language Testing

- Developing rating instruments for the assessment of Academic Writing and Speaking at Austrian university English departments (Armin Berger / Helen Heaney)

- I.4 German as a Foreign Language

- Österreichisches Sprachdiplom Deutsch (Manuela Glaboniat / Carmen Peresich)

- Part II: Language Testing Research

- II.1 National Educational Standards Tests

- Beurteilerübereinstimmung und schwer zu beurteilende Texte im Vergleich Training und Bildungsstandardüberprüfung in Deutsch auf der vierten Schulstufe (Roman Freunberger / Simone Breit / Marcel Illetschko)

- On the discrepancy between rater-perceived and Empirical Item Difficulty. Standardsetting for the receptive skills in the Austrian Educational Standards Tests for English (Günther Sigott / Hermann Cesnik)

- The role of error in assessing English Writing in the Austrian Educational Standards Baseline Test (Florian Pibal / Günther Sigott / Hermann Cesnik)

- The washback of standardised testing in the subject English at lower secondary level (Claudia Mewald)

- Cognitive processes as predictors of item difficulty in the Austrian Educational Standards Baseline Test for English Reading at grade 8 (Klaus Siller / Ulrike Kipman)

- II.2 National School-Leaving Exam (Matura)

- Analyzing the 2016 standardized matriculation exam results in the foreign languages: post-test analysis (Doris Frötscher / Michael Themessl-Huber / Theresa Weiler)

- Matura washback on the classroom testing of Reading in English (Doris Frötscher)

- How do test takers write a blog? A corpus-based study of linguistic features in performances based on the task type Blog (Michael Maurer)

- Reading strategies in the standardized Austrian matriculation examination for English (Franz Holzknecht)

- Validating multiple choice Language in Context items (Kathrin Eberharter)

- Evaluating the effectiveness of a training program for double-raters (Kathrin Eberharter / Franz Holzknecht / Benjamin Kremmel)

- Examining the effect of partner choice in peer-to-peer assessment (Matthias Zehentner)

- Annäherung der Transformationsfunktion von Logit Measures auf Fair Measures im Multifacetten-Raschmodell (MFRM) durch logistische Regression. Eine Simulationsstudie (Hermann Cesnik)

- II.3 Tertiary-Level Language Testing

- Rating scale validation for the assessment of spoken English at tertiary level (Armin Berger)

- Operationalizing expeditious reading strategies in tests of English as a foreign language (Helen Heaney)

- A criterion-referenced approach to the Vocabulary Levels Test: validating form B of the 2000 and 3000 word levels, and the Academic Wordlist (Hans Platzer)

This book is the result of a collaborative effort. I would like to thank all contributors for making this volume possible. Their support and cooperation during two years of intensive work were exceptional, delightful and refreshing. I am grateful to Christina Thamer for her valuable help in the editing process. I would also like to thank Rüdiger Grotjahn and Claudia Harsch for undertaking the time-consuming task of reviewing all thirty-one contributions. My particular thanks go to my long-standing colleague Hermann Cesnik for sharing his vast expertise in statistical methods, psychometrics and beyond, his excellent support in IT matters, his absolute reliability, devotion to the cause, and his friendship throughout the entire project. Thank you, Hermann.

This volume marks a decade of modern language testing in Austria. Part I describes developments at all levels of the educational system ranging from elementary school to university level. Part II aims to chart the language testing research territory by bringing together a considerable amount of research that has accompanied and followed national exam development and reform since 2007, thus testifying to the existence of an important and active language testing community in Austria.

1. The situation before the reform

Since 2007 the language testing scene in Austria has changed enormously. In the time before, basic tenets of language test development were, at best, present in the isolated expertise of individual researchers or dedicated language teachers scattered in institutions all over the country. Language testing as an academic discipline was not established, and people attempting to establish language testing as a worthwhile field of enquiry within the academic landscape in Austria were seen as exotic. This is not to say that language competences were not assessed. As in most other countries, there had been a time-honoured tradition of assessing pupils’ and students’ language achievements towards the end of compulsory schooling at grades 8 or 9, when pupils are between 14 and 15 years of age, in the form of a school certificate. At the end of upper secondary level, when students were aged 18 or 19, their achievements were assessed in a high-stakes examination, the so-called Matura, in academic and vocational secondary schools. For those who had chosen to study a philological subject other than their mother tongue, language achievement or proficiency was assessed at the end of their university studies. Questions relating to the reliability or validity of these examinations were rarely asked.

In the first decade of the 21st century, it gradually became clear that the traditional examination procedures were becoming more and more anachronistic. The Common European Framework of Reference (CEFR) for Languages had been around for years and language proficiency requirements and achievements were increasingly being described with reference to the six levels of the CEFR. By 2007, the situation concerning high-stakes language examinations in Austria gave rise to questions like the following (Sigott 2007:160):

- Does the exam aim to test the things which it should test?

- Is it sufficiently clear what the exam is actually testing?

- Does the exam test the same things in all the schools or university departments?

- Does / will the exam test the same things every year?

- Are parallel forms of the exam available?

- Are results comparable from year to year?

- Is it clear how the exam relates to the CEFR?

- If the answer to any of the above questions is YES: What evidence is there?

Answering the first question was difficult because at the end of both secondary and tertiary level the desired outcomes had not been described in sufficient detail, presumably because there was a lack of agreement as to what they should be. The second question goes to the heart of test validity, and requires evidence, which, in turn, presupposes a detailed description of the test constructs that constitute the basis for the tests in question. But such evidence was not available in 2007. Given the negative answers to the first two questions, it could safely be assumed that the answer to question three was also negative. After all, if there are no commonly agreed-upon test specifications, coordinating exam content and method is practically impossible. The obvious conclusion was that neither Matura nor B.A. exit examinations were likely to test the same skills or competences across schools or modern language university departments, respectively. For the same reasons, it was unlikely that the test constructs remained stable from year to year, which meant a negative answer to question four as well. In the absence of test specifications and calibrated item banks it was not to be expected that parallel forms of either Matura or B.A. exit examinations existed, which meant a negative answer to question five. This indicated that neither exam content nor exam difficulty was likely to be stable over the years in either examination context, which suggested a negative answer to question six. Obviously, the prerequisites for attempting to link the examinations to the CEFR were not met, and question seven also had to be answered negatively. Given this state of affairs, the conclusion I reached in 2007 read as follows:

Since the answers to the above questions have been negative, there is no need to approach question eight. In fact, there is no relevant evidence that would justify a positive answer to any of the seven questions. Changing the answers from negative to positive is a big challenge and sets the agenda for language testing in Austria (Sigott 2007:161).

The recognition of this state of affairs led to reactions at all levels in the educational system. Educational standards testing was introduced at the end of primary school and at the end of the lower secondary level. At the end of upper secondary level, a new centralised Matura exam was implemented, and attempts were made to standardise the testing of achievement at university exit level in the language subjects. These developments are described in the contributions to Part I.

2. Exam reform projects: Educational Standards, Matura, ELTT Initiative

The first decade of the 21st century saw a growing interest in descriptions of learning goals in the language subjects. This was helped by the availability of the level descriptors of the CEFR, which were seen as welcome formulations of teaching targets for foreign languages, particularly English. Educationists, politicians and practitioners started talking about competence-based teaching. Eventually, policy makers propagated the idea of measuring attainment of these targets for the purpose of system monitoring (Lucyshyn 2004; 2007). This led to the introduction of the National Educational Standards (Bildungsstandards) in the core subjects German as a first language, Mathematics, and English as a foreign language. Once teaching targets had been formulated, it was decided to monitor attainment of these targets by means of standardised testing systems and to provide feedback to stakeholders, at both individual and institutional levels. Accordingly, educational standards tests were developed to test achievement in German as a first language and in Mathematics at the end of primary school, and in German as a first language, Mathematics and English at the end of the lower secondary level. The tests for German as a first language cover reading and writing at the end of both primary school and the lower secondary level, while English at lower secondary level is tested in the four skills of listening, speaking, reading and writing. A new institution, BIFIE (Bundesinstitut für Bildungsforschung, Innovation und Entwicklung des österreichischen Schulwesens), was created and put in charge of exam development with professional support from the universities of Vienna and Klagenfurt.

The contributions in Part I are arranged according to the level in the educational system that they refer to. The competence models for L1 German in the National Educational Standards and their operationalisation as test tasks and items are described by Eibl, Schwaiger and Breit. This is followed by a report by Luger-Bazinger, Itzlinger-Bruneforth and Schreiner on the development of a procedure for providing criterion-related feedback to individuals and to institutions, taking L1 German reading as an example. For English, the test constructs and their operationalisation are described by Mewald, Allan and Kulmhofer (Listening), Siller and Kulmhofer (Reading), Mewald (Speaking) as well as Kulmhofer and Siller (Writing).

BIFIE was also put in charge of implementing a new high-stakes Matura exam for the end of the upper secondary level. This meant creating standardised tests for all the subjects, including Mathematics, for which written examinations were prescribed. Thus, for the language subjects, standardised exams had to be developed for testing achievement in the language of instruction, predominantly German but also Hungarian, Croatian and Slovene, for the so-called modern languages, namely English, French, Italian and Spanish, and for the classical languages Ancient Greek and Latin. This monumental task was undertaken jointly by BIFIE, the universities of Innsbruck, Klagenfurt and Vienna, and external consultants. Exam development for the modern languages was spearheaded by a project started by the University of Innsbruck with the support of external consultants from Great Britain. This complex and demanding task involved creating tests for Listening, Reading, Writing and Language in Use for English, French, Italian and Spanish at different levels for candidates with eight, six and four years of instruction in different languages, respectively. All these exams were linked to the CEFR. The development of the exams for German, Hungarian, Croatian and Slovene as first languages or languages of instruction as well as the development of the exams for the classical languages were supported by expertise from the universities of Klagenfurt and Vienna and by external consultants from Germany and Switzerland. Unlike in the case of the modern languages, these exams could not be linked to an external scale like the CEFR because such scales are not available. Struger describes the theoretical bases underlying the written exam for German as a language of instruction and their operationalisation. The field testing of a large number of tasks developed according to these principles is described by Cesnik and Sigott.
Spöttl, Eberharter, Holzknecht, Kremmel and Zehentner provide an account of the process and challenges of implementing competence-based assessment for the modern languages English, French, Italian and Spanish while Weiler and Frötscher describe exam maintenance and quality control procedures for the exam now that it has been implemented. The way the new exam philosophy has been adopted in the classical languages Latin and Greek is described by F. Lošek, Glatz, Niedermayr and Weyrich-Zak, and selected aspects of its implementation, including washback effects, are discussed by Sörös, Seitz, Glas, Kuchling, Oswald and A. Lošek.

At university exit level, a group of university language teachers from the universities of Graz, Innsbruck, Klagenfurt, Salzburg and Vienna constituted the English Language Testing and Teaching Initiative (ELTT), led by the Klagenfurt Language Testing Centre. This initiative aimed at formulating level descriptors for assessing achievement in English writing and speaking. These were intended for assessing students majoring in English language and students in the English teacher training programmes at the end of their university language training. Analytic rating scales were developed on the basis of the CEFR in a combination of theory-based and empirical approaches and benchmarked performances were made available. Unlike in the case of the educational standards and Matura, the use of these scales is not compulsory, but it is up to the individual university department to decide whether or not to use them. These developments are described by Berger and Heaney.

Finally, the testing of German as a Foreign or Second language at all six levels of the CEFR in the institutional framework of the Österreichisches Sprachdiplom (ÖSD) is described by Glaboniat and Peresich.

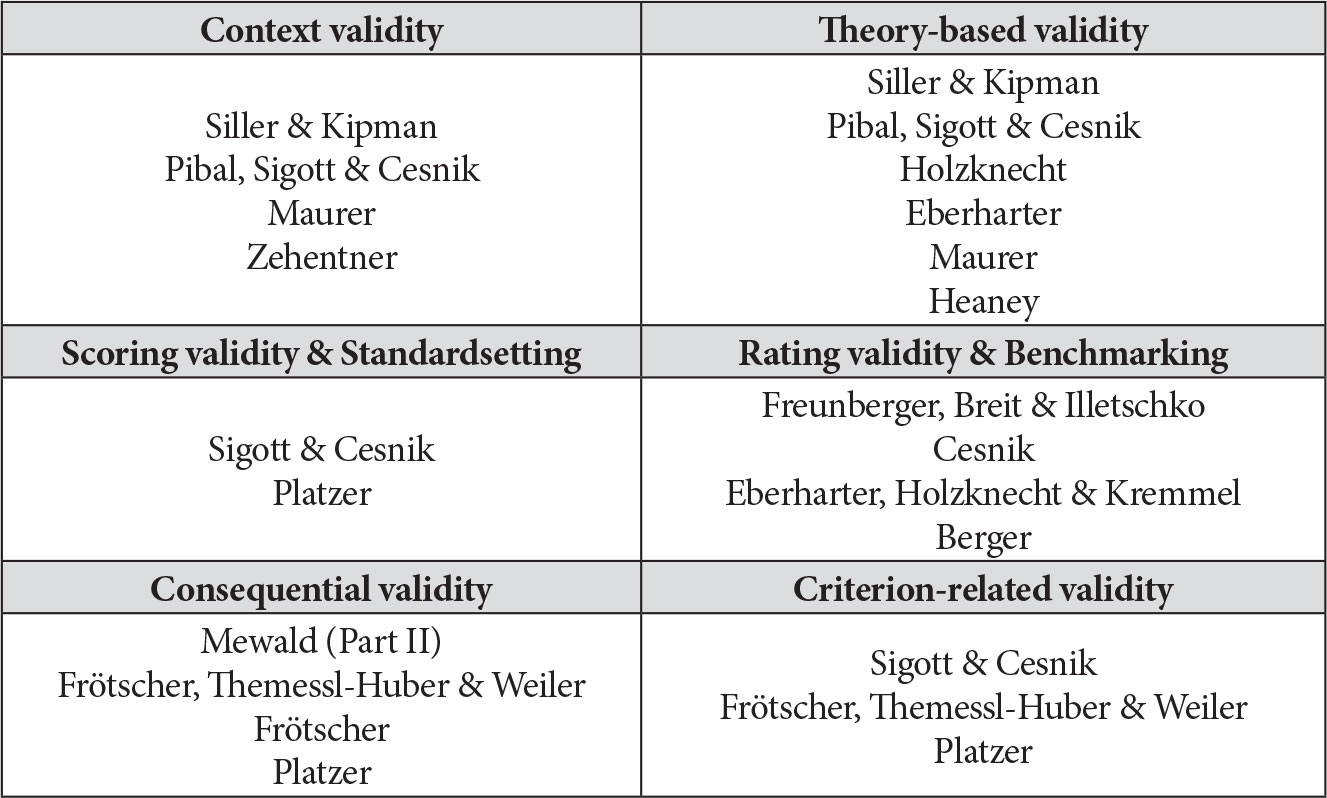

3. The research scenario

The existence of national tests and examination systems with candidatures between 25,000 and 80,000 has given rise to a considerable variety of research. This is remarkable given the short time that has elapsed since the examinations entered the operational phase. Research has been carried out on tests or examinations at all the levels of the educational system ranging from primary to university level. This research is presented in Part II. The contributions in Part II are also arranged according to the level in the educational system that they refer to. Within each level, the research studies are grouped according to an adapted version of Weir’s (2005) validity framework shown in Figure 1. This identifies six aspects of validity, which interact with each other and also overlap. In a tentative spirit, the contributions have been entered into the individual boxes according to the editor’s interpretation of the original framework as well as the editor’s perception of the main focus of each study. The allocation of studies to the individual aspects of validity is not intended to be prescriptive in any sense but is meant to aid the reader’s orientation throughout the volume.

Fig. 1: Mapping of research foci against Weir’s validity framework (based on Weir, 2005, pp. 44–47)

Context validity refers to all facets of the test context from physical features like background noise or lighting to expectations held by stakeholders, which includes criteria for correctness. Theory-based validity is a cover term for the aspects of the test construct that are accessed by the test, particularly the cognitive processes that are triggered by the test tasks. Scoring validity and standardsetting relates to the process of translating test performance into numerical format for item-based tests while rating validity and benchmarking refers to the analogous process for tests of the productive skills. Criterion-related validity encompasses correlational approaches as well as attempts at relating test scores to well established published performance level descriptors, as is typically the case in attempts to link tests to the CEFR. Consequential validity refers to the effects that tests have for stakeholders. This includes effects on teaching, learning, and formative as well as summative assessment practices in the classroom.

In the area of the educational standards, context validity, theory-based validity, scoring validity and standardsetting, rating validity and benchmarking, criterion-related validity as well as consequential validity have been addressed to different extents. Context validity and theory-based validity are addressed in a study by Siller and Kipman, who examine contextual and cognitive factors that account for differences in item difficulty in the E8 reading test. Pibal, Sigott and Cesnik have studied the role that language errors play in the rating of writing performances in the E8 writing baseline test, thus shedding light on criteria for correctness that are consciously or subconsciously applied by the raters. Problems of scoring validity and standardsetting are addressed in a study by Sigott and Cesnik, who, after describing approaches to standardsetting for the receptive skills in English, focus particularly on the discrepancies between empirical item difficulty and the difficulty estimates made by a standardsetting panel and ways of dealing with these discrepancies without asking panellists to negotiate agreement. Freunberger, Breit and Illetschko report on a study in the area of rating validity and benchmarking. They describe the rater training for the writing part of the German test at the end of primary school and document changes in rater behaviour from the training phase to operational testing with particular focus on rater severity and rater agreement. In particular, they identify texts with regard to which the raters tend to disagree and suggest research into features which are characteristic of such controversial texts. Cesnik focuses on an important methodological aspect of Multifacet Rasch Measurement, namely the algorithm for computing so-called fair measures, which are a frequently used statistic reported in studies of rating validity and benchmarking.
Criterion-related validity is also addressed by Luger-Bazinger, Itzlinger-Bruneforth and Schreiner in Part I, who describe procedures for linking test scores for the receptive skills to level descriptors of the competence model for L1 German. Sigott and Cesnik (see above) describe and evaluate procedures for linking test scores for the receptive skills in English to the CEFR. Finally, consequential validity is addressed by Mewald, who attempts to trace washback effects of the English standards tests at lower secondary level with regard to teaching, assessment practices as well as students’ attitudes and motivation to learn.

The research focusing on Matura addresses context validity, theory-based validity, rating validity and benchmarking, criterion-related validity as well as consequential validity. In the area of context validity, Maurer investigates linguistic features characteristic of blogs, a new text type among the text types required in the writing component. An important aim of this research is to contribute towards concretising criteria for correctness that are applied in the rating process for this genre. Zehentner studies the effect of partner choice in the oral pair interview, which was introduced in 2012. The focus of interest is on whether students are able to choose partners in a way that is beneficial to their performance and to the subsequent rating. His study is exceptional in that it concerns the oral component of Matura, which to date has not been standardised to the extent the written components are. Three studies have a focus on theory-based validity. Holzknecht investigates the reading strategies that are triggered in test takers of the reading component of Matura by means of think-aloud protocols and compares them to experts’ judgments as to what individual items are testing. In an analogous study for the language in context component, Eberharter probes into the thought processes activated in test takers when processing the multiple-choice items of this component and relates them to expert judgments of item focus. Maurer’s study (see above) also touches upon theory-based validity by identifying aspects of competence that are employed in the process of writing blogs as a task type in the writing component. The study by Eberharter, Holzknecht and Kremmel is highly relevant in the context of rating validity and benchmarking. They report on the effectiveness of a training programme for raters who double-rate writing performances for which this is deemed useful or for which this is required by the exam regulations.
Their focus is on identifying facets of the training programme that are crucial in maximising rater consistency. Aspects of criterion-related validity are addressed by Frötscher, Themessl-Huber and Weiler. They report on the results of the 2016 Matura and focus on the effect of gender, school type, region, level of urbanity and years of instruction for French, Italian and Spanish, thus investigating potential test bias. By comparing the CEFR levels reached by the candidature to the stipulations of the individual curricula, they also touch upon washback, thus contributing evidence of consequential validity. Frötscher’s study addresses the issue of washback more directly by investigating in detail the effect of the new Matura exam on the testing of reading in summative classroom tests. In contrast to Mewald (see above), who investigates the washback of the national standards tests, Frötscher finds clear evidence of washback of the new Matura exam. Aspects of washback in the classical languages are also described in the contribution by Sörös et al. in Part I, who report on developments in textbooks, assessment and in-service teacher training as a consequence of the new Matura exam.

The language testing research on assessment tools at university exit level is focused on rating validity and benchmarking, theory-based validity and criterion-related validity. In the area of rating validity and benchmarking, Berger reports on a series of studies aimed at providing validity evidence with regard to the ELTT speaking scales for presentations and interaction. He investigates the proficiency continuum posited by the scales using a multi-method approach that involves descriptor sorting, descriptor calibration and descriptor-performance matching. On the basis of the results, he suggests two modified versions of the ELTT scales and, more generally, detail to be added to the descriptors for the CEFR levels C1 and C2. Heaney’s study deals with expeditious reading and refers primarily to theory-based validity. She suggests a definition for the construct of expeditious reading, a much-neglected aspect in the testing of reading. Based on a review of the relevant literature, she suggests ways of operationalising expeditious reading strategies, describes a prototype version of a test of expeditious reading, and identifies directions for further research into operationalising expeditious reading strategies. Platzer’s contribution focuses on criterion-related validity but also deals with aspects of scoring validity and standardsetting, and of consequential validity. He examines issues of validity in vocabulary testing at tertiary level using criterion-referenced rather than norm-referenced methods.

The contributions to this volume show that not only has there been considerable progress in test development but that research activities are gaining momentum. In fact, a variety of research directions are being pursued, focusing on testing at all levels in the educational system. Further research is needed in the areas of washback, rating scale validation, cognitive strategy use and genre-specific features of the text types required in writing. A central area that will need more attention in the future is research into causes of differences in task or item difficulty in all languages tested. Studies along this line will need to be widened in scope to include a wider range of contextual and cognitive features, and to also include the productive skills, particularly writing. Here the focus will need to be on text features that predispose raters to award particular scale scores to writing performances. With reference to the test constructs of interest, the features studied could be categorised as those which are, and those which are not, supposed to affect task or item difficulty. The empirical data will then make it possible to determine the extent to which the test developers’ intentions have been realised in the test tasks or items. While this kind of research is of obvious relevance for test validation, it is equally relevant for standardsetting. The better the members of standardsetting panels understand and perceive factors which determine item difficulty, the more agreement there will be among panellists but also, and more importantly, between the panellists’ difficulty estimates and the empirical task or item difficulties. Research along these lines also has obvious relevance for teaching. The more is known about features affecting task and item difficulty, the better we understand the nature of the constructs, and, importantly, the nature of the competences which constitute the teaching goals. 
In fact, this kind of research could serve as the hub for future studies as it connects with most validation research in a more or less direct way.

References

Lucyshyn, J. (2004). Implementierung von Bildungsstandards in Österreich. Erziehung und Unterricht 7-8 (154. Jg.), S. 613–617.

Lucyshyn, J. (2007). Bildungsstandards in Österreich – Entwicklung und Implementierung: Pilotphase 2 (2004–2007). Erziehung und Unterricht 7-8 (157. Jg.), S. 566–587.

Sigott, G. (2007). A case for language testing. In M. Reitbauer, N. Campbell, S. Mercer & R. Vaupetitsch (Eds.), Contexts of English in Use (pp. 159–166). Vienna: Braumüller.

Weir, C. J. (2005). Language Testing and Validation: An Evidence-Based Approach. Basingstoke: Palgrave Macmillan.

* Address for correspondence: Günther Sigott, Alpen-Adria-Universität Klagenfurt, guenther.sigott@aau.at.

I.1 National Educational Standards Tests

Sandra Eibl, Silke Schwaiger & Simone Breit*

Bildungsstandardüberprüfung Deutsch: Kompetenzmodelle und Aufgabenentwicklung

Educational standards and standards testing in Austria aim to further improve educational quality in schools and to enhance teaching. These standards define which competencies pupils should have at the end of the 4th and the 8th school year. On behalf of the Austrian Federal Ministry of Education, the Federal Institute for Educational Research, Innovation and Development of the Austrian School Sector (BIFIE) is in charge of further developing, implementing and monitoring educational standards in Austria. This section focuses on German standards testing in Austria. It illustrates the principles of standards testing from the development of competence models, which are a crucial foundation of educational standards besides curricula, through the production of test items within various fields of competencies and their psychometric analyses to the use of these items in testing.

1. Introduction

Educational standards specify which competences pupils should have at the end of grade 4 and grade 8. They serve orientation, support and evaluation functions (BGBl. II Nr. 1/2009; Schreiner & Breit, 2016, pp. 2f.) and play a central role in the further development of schools and teaching (Klieme et al., 2003, pp. 47ff.). In the German-speaking countries, the development of educational standards was decisively influenced by the expert report of a group of experts led by Eckhard Klieme (Klieme et al., 2003) (George, Süss-Stepancik, Illetschko & Wiesner, 2016, p. 68). The legal basis for the introduction of educational standards in the Austrian school system is a regulation (Verordnung) issued in January 2009 by the Bundesministerium für Unterricht, Kunst und Kultur (formerly BMUKK, now BMB, Bundesministerium für Bildung). It defines the scope of application, explains the terminology and the functions of the educational standards, and contains the educational standards for grades 4 and 8. The regulation also stipulates that, from the school year 2011/12 for grade 8 and from the school year 2012/13 for grade 4, periodic standards tests are to determine the degree to which pupils have attained the competences, objectively and by means of validated tasks, and to compare it with the intended learning outcomes (BGBl. II Nr. 1/2009).

Educational standards formulate educational goals and are defined as normative regular standards (Regelstandards), i.e. they describe competencies that pupils should, on average or as a rule, have mastered at a particular point in their school career. They are thus grounded in competence theory and are understood as specific performance expectations. Their important task is to name the competencies that pupils are to acquire in order to reach educational goals (Klieme & al., 2003, p. 71).

The concept of competence in the context of the Austrian educational standards follows Weinert, who defines competencies as “the cognitive abilities and skills that individuals have available or can learn in order to solve particular problems, together with the associated motivational, volitional and social readiness and abilities to use the solutions successfully and responsibly in variable situations” (Weinert, 2002, pp. 27–28). The Austrian educational standards attempt to cover Weinert's concept of competence in all its facets: the competence measurement tests the pupils' cognitive abilities and skills. In addition, social aspects of learning, such as the motivational and volitional readiness Weinert refers to, are captured by means of a context questionnaire.

More recent research gives the concept of competence a sharper focus and a stronger contextualisation. In the DFG Priority Programme “Competence Models for Assessing Individual Learning Outcomes and Evaluating Educational Processes” (Kompetenzmodelle zur Erfassung individueller Lernergebnisse und zur Bilanzierung von Bildungsprozessen; duration: 2007–2013), initiated by Detlev Leutner and Eckhard Klieme, competencies are conceived as “context-specific cognitive dispositions for achievement” [italics in the original] (Klieme & Leutner, 2006, p. 879; DFG-Schwerpunktprogramm 2017). It is mainly more recent studies dealing with concepts of competence that refer to this definition (Böhme & al., 2017, p. 55).

This chapter provides a first introductory overview of the foundations and processes of the Austrian educational standards for the subject German. It traces the path from the foundations on which the educational standards were developed, namely the competence models, through the test-theoretical foundations, the writing of the individual test items and their psychometric trialling, to the development of the complete test for the German educational standards test.

2. Competence models as the basis for developing educational standards

Alongside the curricula of the respective school type and grade, so-called competence models form the basis for developing the educational standards (BIFIE, 2017a; see Figures 3 and 5). The research literature distinguishes several types of competence model, namely competence structure models (a), competence level models (b) and competence development models (c) (Böhme & al., 2017, pp. 55–58; Klieme & Leutner, 2006, pp. 883–885). Competence structure models (a) address “the question of which and how many different competence dimensions can be differentiated in a specific domain” (Klieme & Leutner, 2006, p. 883). Competence level models (b), by contrast, describe “at which level of a competence, graded in steps, pupils can as a rule cope with which concrete cognitive and linguistic demands” (Böhme & al., 2017, pp. 55f.; Klieme & Leutner, 2006, p. 883). Compared with these two variants, competence development models (c) are more differentiated and more comprehensive, since they “can both map developmental trajectories and describe changes in competence structures over time” (Böhme & al., 2017, p. 56).

The competence models for German at grades 4 and 8 cannot capture such a development of competencies. Rather, they are competence structure models and are aligned with the curricula of the respective grade. There are also parallels to the model of Ossner (2006; 2008), who developed a competence model for the teaching of German (Deutschdidaktik) and thereby subdivided the areas of the subject. His work is central to the field. Becker-Mrotzek and Schindler (2007, pp. 7–26), for example, developed their competence model for writing with reference to Ossner. Moreover, the construct descriptions for grade 4 and grade 8¹ repeatedly refer to Ossner's model, against the background of which the competence models for primary level and lower secondary level are set out.

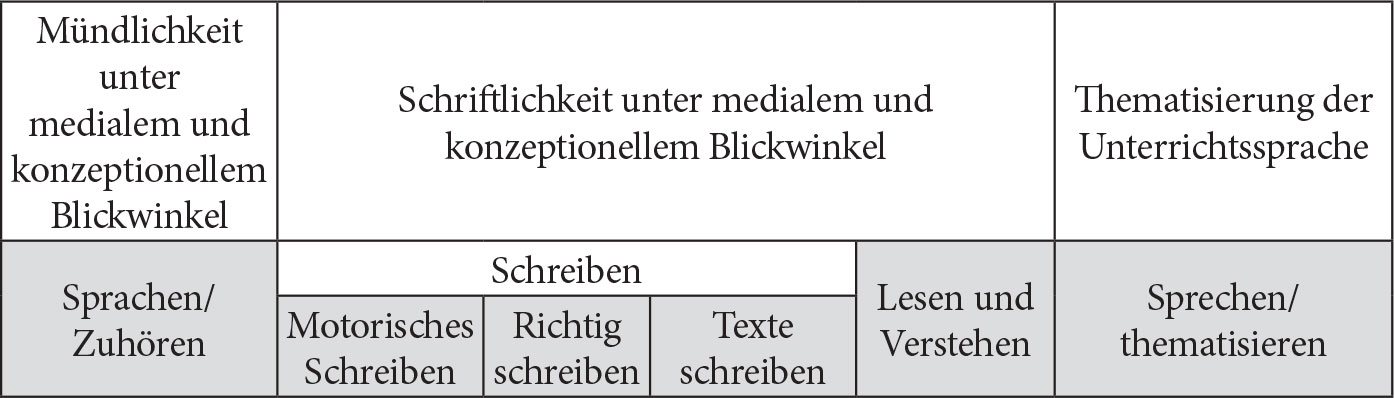

Ossner's competence model is outlined below, both to give a better understanding of the competence models for German and to point out ways in which these models could be developed further. Ossner starts from an analytical model of the areas of work in German lessons (Ossner, 2008, pp. 41–44; Ossner, 2006, p. 9). It comprises three fields of action: orality from a medial and a conceptual perspective, literacy from a medial and a conceptual perspective, and reflection on the language of instruction (Ossner, 2006, p. 9).² These three fields are specified and differentiated further into the sub-areas Speaking/Listening, Motor writing, Correct writing (spelling), Writing texts, Reading and understanding, and Reflecting on language.

Fig. 1: Analytical model of the areas of work (Ossner, 2006, p. 9)

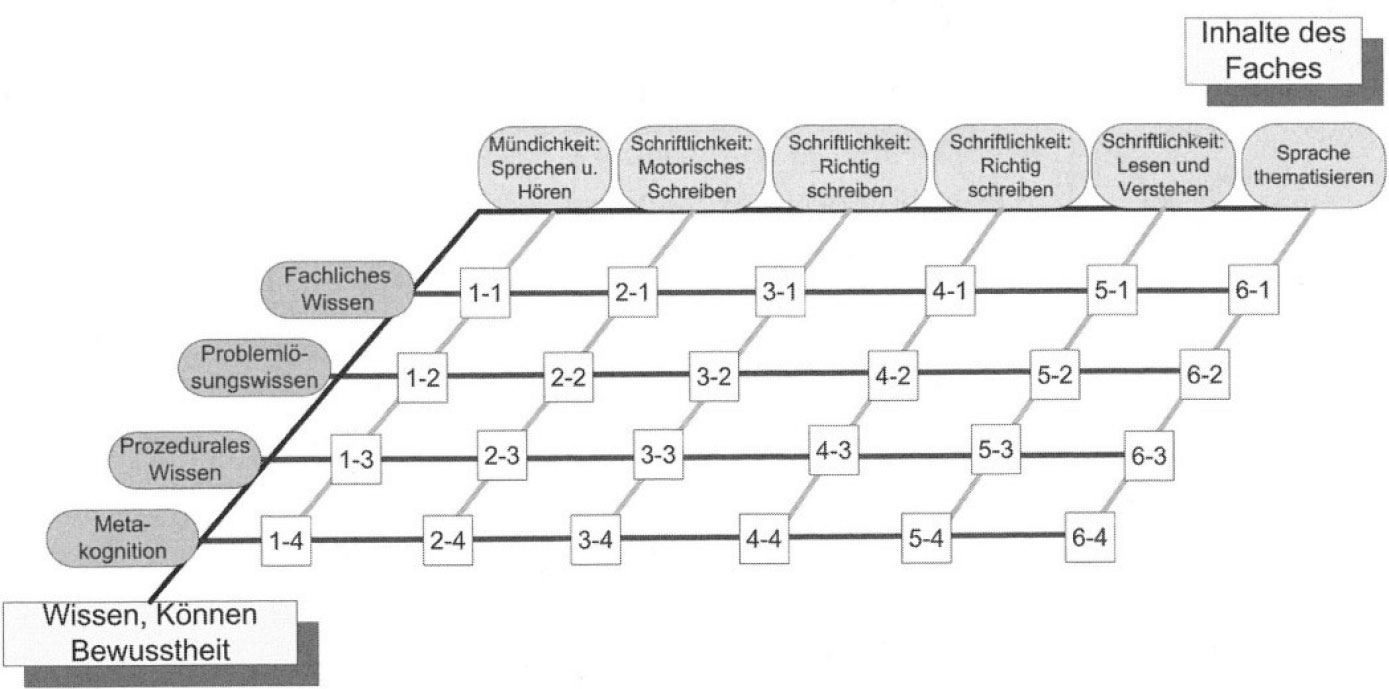

These six areas reflect the content of the subject German and form the x-axis of Ossner's basic model (see Fig. 2). The y-axis shows types of knowledge taken from educational psychology, which further differentiate the content areas of the subject. Here Ossner follows Mandl's types of knowledge. Mandl distinguishes four types, which he assigns to the domains of knowledge, ability and awareness: declarative knowledge (i.e. subject knowledge), problem-solving knowledge, procedural knowledge and metacognitive knowledge (Mandl & al., 1986, cited in Ossner, 2008, p. 32). In Ossner's account it is the concept of knowledge from educational psychology, rather than that of ability, that is central. His approach thus differs “already at the conceptual level from the concept of competence used in empirical educational research” (Böhme & al., 2017, pp. 56f.).

Fig. 2: Competence model (basic model) (Ossner, 2008, p. 45)

In Ossner's basic model (see Fig. 2), the content level of the subject German (= competence contents) intersects with the types of knowledge (= competence dimensions), forming a matrix of 24 points (six content areas by four types of knowledge). These points make it possible to consider what knowledge pupils have (or should have). Put normatively, for example: what subject knowledge should pupils have in the area of orality (1-1) or in the area of reflecting on language (6-1)? Ossner does not stop there, however, but develops his basic model further. In a next step he weights the competence model, i.e. the points, or competencies, of the matrix are assigned different values (Ossner, 2006, p. 13). To stay with the example above, this would mean that subject knowledge in the area of reflecting on language is given greater weight than, say, subject knowledge in the area of orality. Besides weighting, the basic model can also be extended to include developmental stages and levels of demand, which can give a more differentiated insight into the trajectories and levels of competence development (Ossner, 2008, p. 46; Ossner, 2006, pp. 13–15).
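The structure of the 24-point matrix can be illustrated with a minimal sketch. It assumes that the content areas and the types of knowledge are numbered in the order in which the text lists them, so that cell "1-1" pairs Speaking/Listening with subject knowledge and cell "6-1" pairs Reflecting on language with subject knowledge. The English labels, the names `CONTENT_AREAS`, `KNOWLEDGE_TYPES` and `build_matrix`, and the ordering of the remaining cells are illustrative assumptions, not taken from Ossner.

```python
from itertools import product

# Content areas (x-axis), in the order given in the text (assumed numbering 1..6).
CONTENT_AREAS = [
    "Speaking/Listening",
    "Motor writing",
    "Correct writing (spelling)",
    "Writing texts",
    "Reading and understanding",
    "Reflecting on language",
]

# Types of knowledge (y-axis), in the order given in the text (assumed numbering 1..4).
KNOWLEDGE_TYPES = [
    "Declarative (subject) knowledge",
    "Problem-solving knowledge",
    "Procedural knowledge",
    "Metacognitive knowledge",
]


def build_matrix():
    """Map cell labels such as '1-1' to (content area, type of knowledge)."""
    return {
        f"{i}-{j}": (area, knowledge)
        for (i, area), (j, knowledge) in product(
            enumerate(CONTENT_AREAS, start=1),
            enumerate(KNOWLEDGE_TYPES, start=1),
        )
    }


matrix = build_matrix()
print(len(matrix))    # 24 cells: 6 content areas x 4 types of knowledge
print(matrix["1-1"])  # ('Speaking/Listening', 'Declarative (subject) knowledge')
print(matrix["6-1"])  # ('Reflecting on language', 'Declarative (subject) knowledge')
```

A weighting of the kind described above could be represented as a second mapping from the same cell labels to numeric weights. No weights are given here because the text does not state any.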

With regard to the different fields of action and areas of work of the subject German, the competence models for German at grades 4 and 8 follow Ossner's basic model. They are, however, less differentiated and do not aim to represent developmental trajectories. In his extended model, Ossner himself already defines different levels of demand (Ossner, 2008, p. 46). These are not part of the Austrian competence structure models for German but were established only later in a standard-setting procedure (see Section 3).

Details

- Pages

- 742

- Publication Year

- 2018

- ISBN (PDF)

- 9783631759738

- ISBN (ePUB)

- 9783631759745

- ISBN (MOBI)

- 9783631759752

- ISBN (Hardcover)

- 9783631749388

- DOI

- 10.3726/b14338

- Language

- English

- Publication date

- 2018 (October)

- Keywords

- Sprachtestentwicklung / Language test development; Bildungsstandards / National educational standards tests; Zentralmatura / National school-leaving examination; Sprachtesten im tertiären Bildungsbereich / Tertiary-level language testing; Sprachtestforschung / Language testing research; Validierung / Validation; Klassische Testtheorie / Classical test theory; Probabilistische Testtheorie / Probabilistic test theory

- Published

- Berlin, Bern, Bruxelles, New York, Oxford, Warszawa, Wien. 2018. 102 b/w ill., 167 b/w tab.

- Product Safety

- Peter Lang Group AG